Joyent Triton / SDC 7 Installation Notes

Here are some notes on my experience setting up a Joyent Triton SDC environment. The purpose of this exercise was to evaluate the stability and utility of the software as a replacement for some of the traditional/legacy virtualization options currently in use. I am working on a few experiments with microservice-based software and wanted a way to run these tiny apps with isolation but without needing the resources of a complete operating system (no, I really do NOT want a BIOS and an emulated floppy disk to run this API endpoint :-). As a long-time FreeBSD user, I understandably like what jails and bhyve are able to do, and they are definitely a viable option. However, FreeBSD doesn't yet have a great management system supporting the jails + bhyve pattern, which is one reason I decided to give the Triton software a test drive.

I'm not a huge fan of the current state of traditional / mainstream virtualization. The standard VMware ESX-like pattern solves a few problems in infrastructure, such as:

- aggregation / consolidation and improved utilization

- improving time to provision

- mobility

While the above benefits are great, at the end of the day in a large enterprise shop, we still may have 50,000 operating systems to patch every week, and each of these operating systems may have hundreds of packages, each of which may or may not be trusted depending on which exploits are active at the time. Also, I might need to pay for licenses for each of these full operating system images - ugh! Wouldn't it be nice if we could start deploying our applications in environments that have fewer dependencies and smaller attack surfaces? Also, wouldn't it be nice if we could provision in containers that are much smaller (in RAM) than typical hypervisors provide today? I want the hypervisor to be open source and to be developed using modern development practices - like using git, for example. With the back doors found in Juniper's firmware recently, and the mystery as to how they actually got there, this type of concern is no longer hypothetical or reserved for the ultra-paranoid.

In my opinion, some of the best advancements in virtualization are embodied in both SmartOS/Triton and FreeBSD's bhyve.

With bhyve, you get an open source and clean re-do of the legacy/monolithic hypervisor incumbents (e.g. VirtualBox, VMware, Xen, etc). The code is small and builds very quickly, and there is even a Mac OS X port called xhyve (see xhyve on GitHub), which is already being adopted by developers to create lightweight sandboxes for testing and security applications. Perhaps SmartOS will support it someday too?

Here's what building xhyve from source looks like on my laptop:

    (mack@gorilla) x2 % git clone https://github.com/mist64/xhyve
    Cloning into 'xhyve'...
    remote: Counting objects: 488, done.
    remote: Total 488 (delta 0), reused 0 (delta 0), pack-reused 488
    Receiving objects: 100% (488/488), 11.39 MiB | 8.80 MiB/s, done.
    Resolving deltas: 100% (267/267), done.
    Checking connectivity... done.
    (mack@gorilla) x2 % cd xhyve/
    (mack@gorilla) xhyve % ls
    ./              .gitignore      config.mk       test/           xhyverun.sh*
    ../             Makefile        include/        xhyve.1
    .git/           README.md       src/            xhyve_logo.png
    (mack@gorilla) xhyve % date ; make ; date
    Tue Mar 22 07:40:27 CDT 2016
    cc src/vmm/x86.c
    cc src/vmm/vmm.c
    cc src/vmm/vmm_host.c
    cc src/vmm/vmm_mem.c
    cc src/vmm/vmm_lapic.c
    cc src/vmm/vmm_instruction_emul.c
    cc src/vmm/vmm_ioport.c
    cc src/vmm/vmm_callout.c
    cc src/vmm/vmm_stat.c
    cc src/vmm/vmm_util.c
    cc src/vmm/vmm_api.c
    cc src/vmm/intel/vmx.c
    cc src/vmm/intel/vmx_msr.c
    cc src/vmm/intel/vmcs.c
    cc src/vmm/io/vatpic.c
    cc src/vmm/io/vatpit.c
    cc src/vmm/io/vhpet.c
    cc src/vmm/io/vioapic.c
    cc src/vmm/io/vlapic.c
    cc src/vmm/io/vpmtmr.c
    cc src/vmm/io/vrtc.c
    cc src/acpitbl.c
    cc src/atkbdc.c
    cc src/block_if.c
    cc src/consport.c
    cc src/dbgport.c
    cc src/inout.c
    cc src/ioapic.c
    cc src/md5c.c
    cc src/mem.c
    cc src/mevent.c
    cc src/mptbl.c
    cc src/pci_ahci.c
    cc src/pci_emul.c
    cc src/pci_hostbridge.c
    cc src/pci_irq.c
    cc src/pci_lpc.c
    cc src/pci_uart.c
    cc src/pci_virtio_block.c
    cc src/pci_virtio_net_tap.c
    cc src/pci_virtio_net_vmnet.c
    cc src/pci_virtio_rnd.c
    cc src/pm.c
    cc src/post.c
    cc src/rtc.c
    cc src/smbiostbl.c
    cc src/task_switch.c
    cc src/uart_emul.c
    cc src/xhyve.c
    cc src/virtio.c
    cc src/xmsr.c
    cc src/firmware/kexec.c
    cc src/firmware/fbsd.c
    ld xhyve.sym
    dsym xhyve.dSYM
    strip xhyve
    Tue Mar 22 07:40:37 CDT 2016

Anyway - we will now get back on track with Triton/SDC :-)

Well, it turns out that with Triton SDC, we can apparently have everything we want! I have been following the work done over at Joyent the last couple of years (some of it on node.js and SmartOS), and to say that I've drunk the Kool-Aid is an understatement! Joyent Triton (aka SmartDataCenter - SDC 7) pretty much checks all the boxes on my "future of virtualization" wishlist. If you haven't heard Bryan Cantrill speak on this (or any subject), go to YouTube and check out some of his evangelism - it's great.

To get started installing a private instance of Triton, I started with the following resources:

- GitHub README for SDC - https://github.com/joyent/sdc

- Triton Installation Guide - https://docs.joyent.com/private-cloud/install

- SDC Install Video on YouTube - SDC 6.5.3 Install Process Demo by Joyent/Ryan Nelson

I'm collecting my notes and drawings and I will update this page over the course of the week with some information you might find useful.

Tips for a smooth install

- hardware - you need at least two nodes: a headnode and at least one compute node. Try to have a headnode with 64GB RAM. I used a 32GB system to start with and it worked, but it was a little too tight: the headnode runs a couple dozen virtual machines that make SDC work, and together they want more headroom than 32GB leaves.

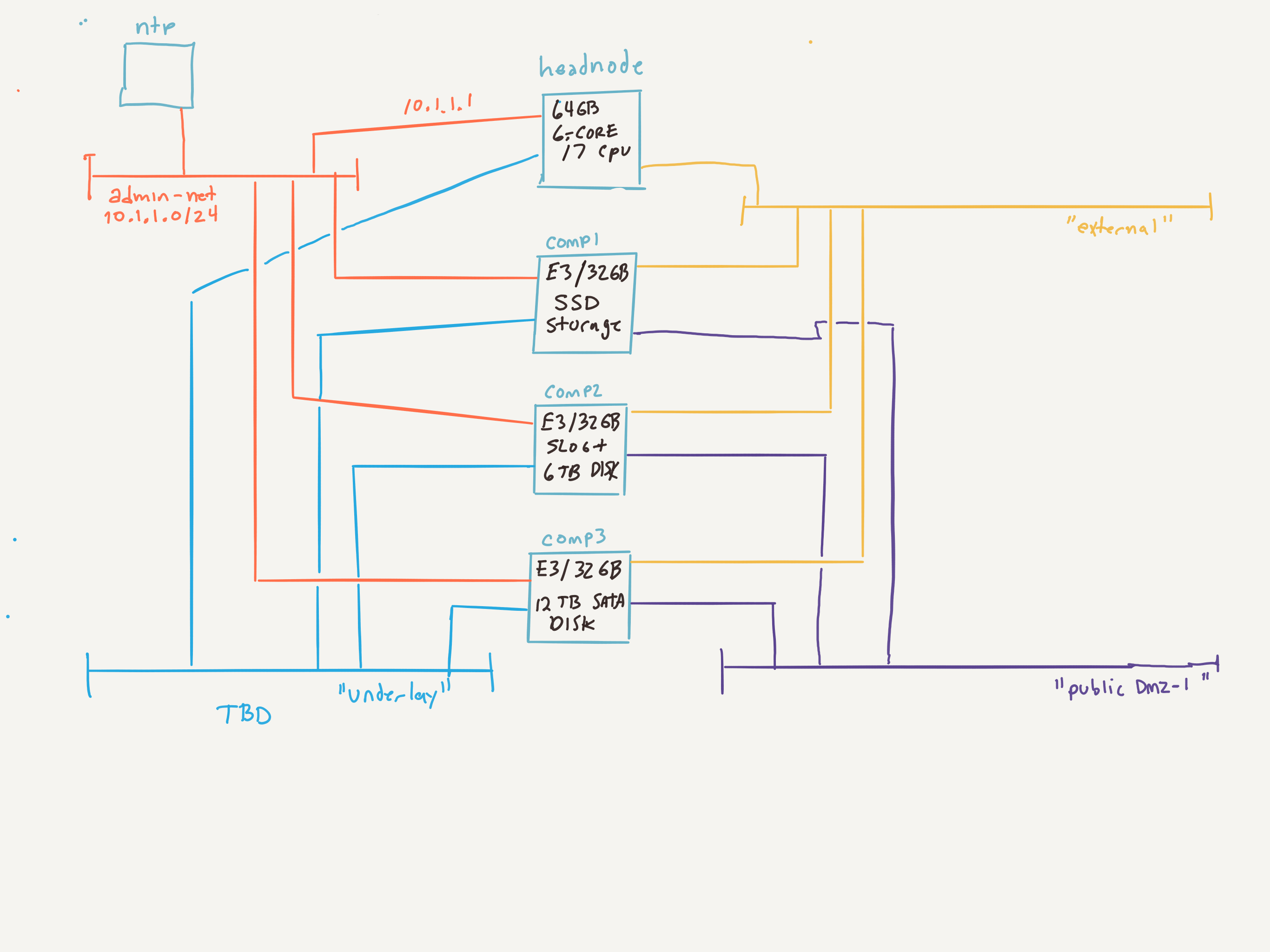

- plan ahead - draw a picture of your servers, admin net, public network, and other networks you plan to use.

- get the latest software:

% curl -C - -O https://us-east.manta.joyent.com/Joyent_Dev/public/SmartDataCenter/usb-latest.tgz

- ntp - this one was a pain. If for some reason the nodes are not able to synchronize their time over the internet, your compute node setup jobs will fail in mysterious ways, and the error messages in the admin UI will not help you at all. I finally tracked it down by viewing the error log files in /tmp on the compute nodes (it drove me crazy, actually, but I'm better now). My solution was to connect my own home NTP server to the admin network. Then, very important: make sure the headnode has established a valid peer relationship with it before setting up any compute nodes.

    [root@headnode (level0) ~]# ntpq -c peer
         remote           refid      st t when poll reach   delay   offset  jitter
    ==============================================================================
    *10.1.1.249      172.22.69.1      4 u  175 1024  377    0.311    6.825   0.008

Make sure you have a little splat * before the remote time server and that the stratum is not 16.

- implicit/automatic storage configuration - when installing Triton nodes, all the disks are automatically configured into a single zpool named 'zones'. How the 'zones' zpool is configured depends on the number and type of disks available in the system. A SmartOS utility, "disklayout", is used to do this; you can read that man page for more information.

- after you have the headnode installed, I found it useful to make the adminui available on the external network so I could finish the installation on the couch instead of in the basement DC. Luckily there is a one-line command for this:

    [root@headnode (level0) ~]# sdcadm post-setup common-external-nics
    Added external nic to adminui
    Added external nic to imgapi
    [root@headnode (level0) ~]# sdc-vmapi /vms?state=running | json -H -ga alias nics.0.ip nics.1.ip
    cnapi0 10.1.1.16
    sapi0 10.1.1.26
    dhcpd0 10.1.1.3
    redis0 10.1.1.18
    moray0 10.1.1.11
    amonredis0 10.1.1.17
    amon0 10.1.1.19
    papi0 10.1.1.23
    ca0 10.1.1.24
    assets0 10.1.1.2
    sdc0 10.1.1.22
    ufds0 10.1.1.12
    workflow0 10.1.1.13
    manatee0 10.1.1.10
    fwapi0 10.1.1.20
    mahi0 10.1.1.27
    imgapi0 10.1.1.15 192.168.88.102
    adminui0 10.1.1.25 192.168.88.101
    binder0 10.1.1.5
    napi0 10.1.1.4
    rabbitmq0 10.1.1.14
    vmapi0 10.1.1.21
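The NTP tip in the list above boils down to two checks on the ntpq output: a peer line marked with * and a stratum below 16. Here is a rough sketch of how that could be scripted before kicking off compute node setup. The function name has_synced_peer is my own invention for illustration (it is not part of SDC or ntp), and it only reads text on stdin, so it assumes the usual ntpq peer table layout with the stratum in the third column.

```shell
#!/bin/bash
# Sketch only: has_synced_peer is a hypothetical helper, not an SDC tool.
# It reads "ntpq -c peer" style output on stdin and succeeds (exit 0) when
# at least one line starts with "*" and carries a stratum lower than 16.
has_synced_peer() {
    awk '
        # A selected sync source starts with "*"; field 3 is the stratum.
        /^\*/ && $3 ~ /^[0-9]+$/ && $3 < 16 { ok = 1 }
        END { exit (ok ? 0 : 1) }
    '
}

# Possible use on the headnode (assumes ntpq is on the PATH):
#   ntpq -c peer | has_synced_peer || echo "no usable time source yet" >&2
```

Keeping the check as a stdin filter means it can be tried against saved output first, without touching a live node.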

Here's a rough view of how everything is cabled together; my next step is to get the underlay network set up so I can test drive some of the networking features and docker patterns.

Here's a 64MB container running SmartOS:

% ssh epoch

__ . .

_| |_ | .-. . . .-. :--. |-

|_ _| ;| || |(.-' | | |

|__| `--' `-' `;-| `-' ' ' `-'

/ ; Instance (base 14.2.0)

`-' http://wiki.joyent.com/jpc2/SmartMachine+Base

[root@f0cd0e7d-7d73-ccbd-9b21-e7a69adcb9d2 ~]# sm-summary

* Gathering VM instance summary..

VM uuid ....

VM id 4

Hostname ....

SmartOS build joyent_20160317T000105Z

Image base 14.2.0

Base Image NA

Documentation http://wiki.joyent.com/jpc2/SmartMachine+Base

Pkgsrc http://pkgsrc.joyent.com/packages/SmartOS/2014Q2/i386/All

Processes 23

Memory (RSS) Cap 64M

Memory (RSS) Used 23M

Memory (RSS) Free 41M

Memory (Swap) Cap 256M

Memory (Swap) Used 35M

Memory (/tmp) Used 0M

Memory (Swap) Free 221M

Memory NOver Cap 0

Memory Total Pgout 0M

Disk Quota 26G

% Disk Used 2%

One month status update and upgrade

I am happy to report that my first month of running this cluster in light production mode has been a success. I have performed a couple of reconfigurations / reinstalls and also performed an upgrade of all the services. Despite my relative lack of experience with SmartOS and Triton, I was able to manage most of those activities using the documentation on the Joyent web site.

I was able to perform a rolling upgrade of all the nodes and services using the steps outlined in this script (SDC Upgrade Script):

#!/bin/bash

set -o errexit

set -o xtrace

sdcadm self-update --allow-major-update --latest

sdcadm experimental update-gz-tools --latest

sdcadm experimental update-agents --latest --all -y

sdcadm experimental add-new-agent-svcs

sdcadm experimental update-other

sdcadm update --all -y

sdcadm platform install --latest

sdcadm platform assign --latest --all

new_pi=$(sdcadm platform list -j | json -a -c 'latest==true' version)

sdcadm platform set-default "$new_pi"

sdc-oneachnode -a 'sdc-usbkey update'

echo "Update of all components complete"

now=$(date +%Y%m%dT%H%M%SZ)

mkdir -p /opt/custom

touch "/opt/custom/core_services_${now}.json"

for uuid in $(vmadm lookup | sort); do

vmadm get $uuid >> "/opt/custom/core_services_${now}.json"

done

exit

I went through each step above manually instead of yolo-ing with the script just in case it did something that didn't make sense. A couple of notes:

[root@headnode (level0) ~]# sdc-oneachnode -a 'sdc-usbkey update'

=== Output from 00000000-0000-0000-0000-0cc47a7a30ca (compute1):

sdc-usbkey: error: no pcfs devices found

=== Output from 00000000-0000-0000-0000-0cc47a7b46b4 (compute3):

sdc-usbkey: error: no pcfs devices found

=== Output from a93b6ca9-1f6e-11e2-a6d3-643150437bc1 (headnode):

update file "boot/ipxe.lkrn"

old shasum: a5dde94654c432196f3cfd1e9bf9e6e5b7663aaa

new shasum: 40bee0f6284231424fd8011a9f8405fd899d8094

Note that the usb-key updates failed on the two compute nodes but succeeded on the headnode. This is normal: the compute nodes do not have USB keys in them, since they always boot over PXE.
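If you have more than a handful of nodes, eyeballing that output gets tedious. Below is a small sketch that lists which hosts reported the "no pcfs devices found" error, so you can confirm they are all compute nodes. The function name nodes_without_usbkey is hypothetical, and the parsing assumes the "=== Output from UUID (hostname):" header format shown above.

```shell
#!/bin/bash
# Sketch only: nodes_without_usbkey is a hypothetical helper name.
# It reads sdc-oneachnode output on stdin and prints the hostname of each
# node whose section contains the "no pcfs devices found" error.
nodes_without_usbkey() {
    awk '
        /^=== Output from / {
            host = $NF                  # last field, e.g. "(compute1):"
            gsub(/[():]/, "", host)     # strip the parentheses and colon
            next
        }
        /no pcfs devices found/ { print host }
    '
}

# Possible use on the headnode:
#   sdc-oneachnode -a 'sdc-usbkey update' 2>&1 | nodes_without_usbkey
```

If the headnode ever shows up in that list, that would be worth a closer look before rebooting anything.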

Also, once the script was run, I used the adminui web GUI to manually reboot each node in the cluster, starting with the headnode, making sure that each node returned to normal before moving on to the next. At the end of the process, all my nodes were at the most recent release:

    HOSTNAME STATUS
    compute1 SunOS compute1 5.11 joyent_20160427T114319Z i86pc i386 i86pc
    compute3 SunOS compute3 5.11 joyent_20160427T114319Z i86pc i386 i86pc
    headnode SunOS headnode 5.11 joyent_20160427T114319Z i86pc i386 i86pc
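A quick way to double-check that every node really landed on the same platform image is to compare the joyent_ build stamps in that listing. This is a sketch under a couple of assumptions: same_platform is a name I made up, and I am assuming the listing comes from something like running uname -a on each node via sdc-oneachnode.

```shell
#!/bin/bash
# Sketch only: same_platform is a hypothetical helper name.
# It reads "uname -a" style lines on stdin and succeeds (exit 0) only when
# exactly one distinct joyent_<timestamp> build stamp appears in the input.
# It fails when stamps differ or when no stamp is found at all.
same_platform() {
    awk '
        {
            # Remember every field that looks like a platform build stamp.
            for (i = 1; i <= NF; i++)
                if ($i ~ /^joyent_[0-9]+T[0-9]+Z$/) seen[$i] = 1
        }
        END {
            n = 0
            for (v in seen) n++
            exit (n == 1 ? 0 : 1)
        }
    '
}

# Possible use on the headnode (the uname invocation is an assumption):
#   sdc-oneachnode -a 'uname -a' | same_platform && echo "all nodes match"
```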

Anyway, hope you find this useful.